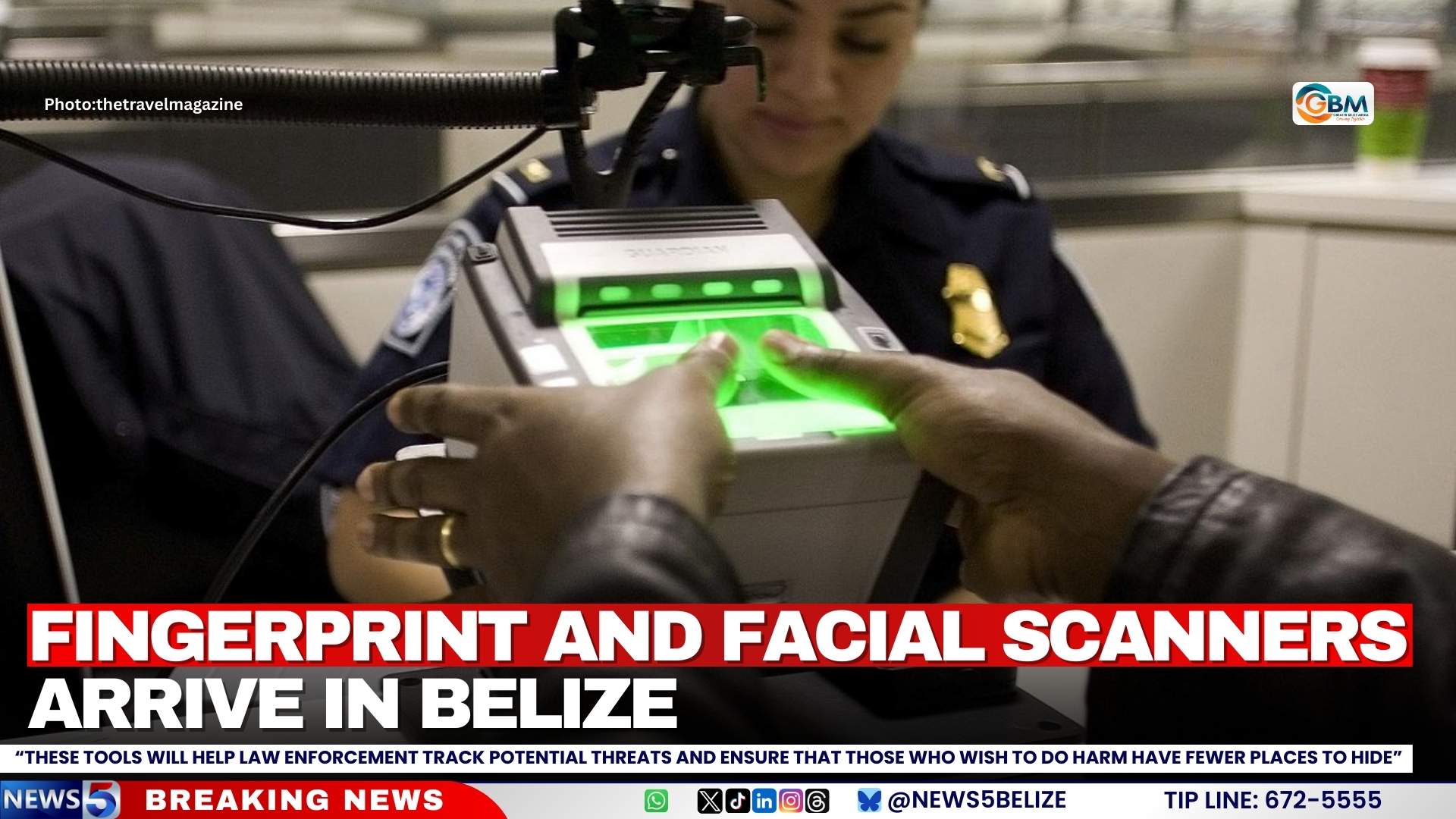

Belize has entered a new era of border security with the formal inauguration of an advanced biometric screening system at its airports and key border checkpoints. This strategic initiative, developed in collaboration with the United States, represents a significant technological upgrade to the nation’s immigration infrastructure.

The newly deployed Biometric Data Sharing Programme uses fingerprint scanners and facial recognition technology to strengthen border protection. The system is designed to tighten national security while streamlining travel for both Belizean citizens and international visitors.

Home Affairs Minister Oscar Mira emphasized the dual benefits of the program during the launch ceremony. “These advanced technological tools will empower our law enforcement agencies to identify potential threats with unprecedented efficiency,” Minister Mira stated. “We are creating an environment where those with malicious intent find diminishing opportunities to exploit our systems.”

The minister further highlighted that the initiative reflects the mutual commitment of both nations to principles of transparency, accountability, and legal governance. The program also signifies the deepening partnership between Belize and the United States in matters of security and technological advancement.

The Department of Border Management and Immigration Services will manage implementation of the biometric system in coordination with the Ministry of Immigration, Governance and Labour. Officials expect the professionalism of these agencies to keep the technology operating effectively while safeguarding civil liberties.

This deployment places Belize among the growing number of nations adopting biometric technology to strengthen border security and manage migration more efficiently.